In this article, we are going to cover Cloud Build, a fully managed serverless CI/CD solution from Google Cloud Platform. To kick off, we will start with what CI/CD even is, what Cloud Build is, and some common use cases, and then move into more advanced topics such as Cloud Builders, running bash scripts with npm, and passing artifacts between jobs.

- What is a CI/CD?

- What is Cloud Build?

- Use Cases

- How to use Cloud Builders

- Bash scripts with npm

- How to pass artifacts between jobs

- Create/Store Docker Images

- Regex Build Triggers

Hope you’re ready for some learning! Let’s get into it 😃.

What is a CI/CD?

To be fair, CI/CD is actually the combination of two different concepts.

Continuous Integration

The first is CI (Continuous Integration), a powerful way to continuously build and test your code. Usually, the process is triggered by an update to your SCM (Source Code Manager). After the trigger fires, your CI builds your application and then runs tests and other operations against the entire application.

Example Steps:

- The code is cloned from SCM (e.g. GitHub)

- Build scripts create a new version of your application

- A new version is put through the wringer in the form of a series of automated tests and other application-specific operations

Benefits:

- Automated execution of the test suite on every SCM trigger

- Fewer production rollbacks due to catching issues early

Continuous Delivery

The second is CD (Continuous Delivery), a powerful way to continuously deploy your code live to end users or to various environments (e.g. dev, QA, prod). Similar to CI, the process is kicked off by an update to your SCM, which runs a series of operations that are completely customizable by your team. These operations are usually packaged up into a series of scripts (e.g. bash, Python) that handle interacting with your service providers.

If you’re interested in diving deeper into scripting with Python instead of bash, which is EXTREMELY powerful, check out the article “Bashing the bash replacing shell scripts with python”. It’s a great article!

Example Steps:

- The code is cloned from SCM (e.g. BitBucket)

- Build scripts create a new version of your application

- A new version is pushed/synced/uploaded to your hosting provider and replaces the old version of your application

Benefits:

- Automated deployment process (fewer human errors)

- Release new versions of your application quickly!

Combining these two concepts lets developers both automatically test and automatically deploy their code, all in a single swipe. Working this way gives your team a pipeline where the build breaks if the code fails testing, before it is deployed and replaces your live application.
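To make the "break before deploy" idea concrete, here is a minimal Python sketch of a pipeline runner. It is not Cloud Build itself: it simply runs steps one after another and stops at the first non-zero exit code, which is roughly how sequential Cloud Build steps behave. The step names and the stand-in commands are hypothetical.

```python
import subprocess
import sys


def run_pipeline(steps):
    """Run (name, command) steps in order and stop at the first failure.

    Returns (names_of_steps_that_ran, succeeded).
    """
    ran = []
    for name, command in steps:
        ran.append(name)
        result = subprocess.run(command)
        if result.returncode != 0:
            # A failing step "breaks the build": later steps (e.g. deploy) never start.
            print(f"step {name!r} failed with exit code {result.returncode}; stopping")
            return ran, False
    return ran, True


if __name__ == "__main__":
    py = sys.executable
    # Stand-in commands: tiny Python one-liners in place of real build/test/deploy scripts.
    steps = [
        ("build", [py, "-c", "print('building')"]),
        ("test", [py, "-c", "import sys; sys.exit(1)"]),  # simulated failing test suite
        ("deploy", [py, "-c", "print('deploying')"]),
    ]
    print(run_pipeline(steps))  # (['build', 'test'], False)
```

Because the test step fails, the deploy step is never executed and the live application is left untouched.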

For more information, check out CI/CD Introduction.

What is Cloud Build?

Cloud Build is a continuous build, test, and deploy pipeline service offered by Google Cloud Platform. It falls into the category of cloud CI/CD (Continuous Integration and Continuous Delivery) services.

“Cloud Build lets you build software quickly across all languages. Get complete control over defining custom workflows for building, testing, and deploying across multiple environments such as VMs, serverless, Kubernetes, or Firebase.” — Documentation

To a large degree, the point of Cloud Build is that it abstracts the “build server” away completely, saving time and accelerating development efforts.

Use Cases:

Here are some common use cases we’ve seen at Serverless Guru.

Web Application Testing and Deployment:

Cloud Build is great for building and deploying your web applications. By taking the existing bash scripts you use to run these deployments locally and putting them into Cloud Build, you can automatically kick off your CI/CD on pushes to GitHub, Bitbucket, or Google Cloud Source Repositories.

Backend Testing and Deployment:

If you have backend APIs that are deployed to GCP or AWS, you can leverage your existing automation framework. We love the Serverless Framework for automating AWS deployments. Normally, you would wrap your backend services up into some deployable unit. This is a perfect candidate for Cloud Build, because you can add an automated testing step that breaks your build if the code pushed to git is broken.

That wraps up this high-level look at creating CI/CD pipelines with Cloud Build that are powered by bash scripts. At the end of the day, once you’re able to run a bash script, the world is your oyster.

How to use Cloud Builders

Cloud Builders are a plug-n-play solution that Google created to make it easy for developers to tap into preconfigured docker containers which support various package managers and other common third-party tools.

Leveraging Cloud Builders means that, for most use cases, you never need to concern yourself with building containers (or, more traditionally, build servers) for your CI/CD. The available Cloud Builders cover everything from git to npm to gcloud.

This is easy to overlook, but I would count the Cloud Builders as one of the most compelling reasons to use Cloud Build.

A really simple example is using the npm Cloud Builder to install our project's dependencies with npm install. Then, in the next step, we could use the npm Cloud Builder again to build our project with npm run build.

Here, npm run build would build our code into a distribution folder for uploading to our static hosting solution. Pretty powerful!
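Cloud Build config files can be written in YAML or JSON, and each step names a builder image plus the args passed to it. As a minimal sketch, assuming the public `gcr.io/cloud-builders/npm` image, the install-then-build steps look like this when the JSON form is generated from Python:

```python
import json

NPM_BUILDER = "gcr.io/cloud-builders/npm"


def npm_build_config():
    """Cloud Build config (JSON form): install dependencies, then build."""
    return {
        "steps": [
            {"name": NPM_BUILDER, "args": ["install"]},       # runs `npm install`
            {"name": NPM_BUILDER, "args": ["run", "build"]},  # runs the package.json "build" script
        ]
    }


if __name__ == "__main__":
    # Saving this output as cloudbuild.json gives Cloud Build an equivalent build config.
    print(json.dumps(npm_build_config(), indent=2))
```

Since the npm builder's entrypoint is npm, the args are just the npm subcommand and its arguments.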

Bash scripts with npm

In this section, we will cover setting up Cloud Build to utilize the npm Cloud Builder alongside our custom bash scripts.

When creating CI/CD pipelines, we need to be able to run custom scripts that help extend our builds and catch edge cases when doing heavy automation. Usually, these are bash scripts.

In the ideal scenario, a Cloud Build step says “run this npm script defined in our package.json file”, and that npm script in turn runs our install.bash script.

Why do we configure it this way?

You may be wondering why we add the npm script at all instead of running the bash script directly. We do this because we want to leverage Cloud Build’s built-in functionality called Cloud Builders.

In our case, we are using the npm Cloud Builder, which makes it simple to run npm commands without needing to install Node on the server or container running your builds. It just works out of the box.

Cloudbuild.yml:

This is an example of what an entire CI/CD pipeline may look like with a series of npm scripts: we install, build, test, and deploy.

Package.json:

The package.json can be thought of as our middleware, connecting our Cloud Build pipeline (cloudbuild.yml) to the bash scripts that actually run the commands.
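The wiring can be sketched in Python as two dicts: the pipeline steps (JSON form of the build config) and the `scripts` section of package.json, plus a small check that every npm script a step calls actually exists. The script names (`cicd:install` and friends), the extra build.bash/test.bash/deploy.bash files, and the package name are hypothetical; only install.bash comes from this section.

```python
import json

# Hypothetical package.json: each npm script simply shells out to a bash file.
PACKAGE_JSON = {
    "name": "my-app",
    "scripts": {
        "cicd:install": "bash ./install.bash",
        "cicd:build": "bash ./build.bash",
        "cicd:test": "bash ./test.bash",
        "cicd:deploy": "bash ./deploy.bash",
    },
}


def pipeline_config(stages=("install", "build", "test", "deploy")):
    """One npm Cloud Builder step per stage, each running `npm run cicd:<stage>`."""
    return {
        "steps": [
            {"name": "gcr.io/cloud-builders/npm", "args": ["run", f"cicd:{stage}"]}
            for stage in stages
        ]
    }


def missing_scripts(config, package_json):
    """List npm scripts referenced by the pipeline but absent from package.json."""
    defined = package_json.get("scripts", {})
    return [
        step["args"][1]
        for step in config["steps"]
        if step["args"][:1] == ["run"] and step["args"][1] not in defined
    ]


if __name__ == "__main__":
    config = pipeline_config()
    missing = missing_scripts(config, PACKAGE_JSON)
    if missing:
        raise SystemExit(f"package.json is missing scripts: {missing}")
    print(json.dumps(config, indent=2))
```

Keeping the pipeline steps thin like this means the real logic lives in the bash files, which developers can also run locally.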

Install.bash:

This is a basic example: all we do is run npm install. However, now that you have access to npm, you can get much more advanced from here.
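If you prefer Python over bash for these scripts, install.bash could become a few lines of Python. This is a minimal sketch under our own assumptions: switching to `npm ci` when a package-lock.json is present is an illustrative extension, and the injectable `run` parameter only exists so the function can be exercised without npm installed.

```python
import subprocess
from pathlib import Path


def install_command(project_dir="."):
    """Choose the install command: `npm ci` when a lockfile exists, else `npm install`."""
    if (Path(project_dir) / "package-lock.json").is_file():
        return ["npm", "ci"]  # clean install pinned to package-lock.json
    return ["npm", "install"]


def install_dependencies(project_dir=".", run=subprocess.run):
    # check=True raises CalledProcessError on a non-zero exit, which fails the build step.
    return run(install_command(project_dir), cwd=project_dir, check=True)


if __name__ == "__main__":
    install_dependencies()
```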

Perfect, now you’re equipped to mirror this setup in your own CI/CD pipelines using Cloud Build. Good luck, let us know how it goes!

How to pass artifacts between jobs

In this section, we will cover how to pass artifacts between jobs (or steps) when using the Cloud Build service. This is an important topic because it allows us to support quite complex workflows without rolling our own workaround.

Full file:

The full cloudbuild.yaml file for this section consists of only two build steps, for simplicity and to stay focused on passing artifacts between build steps.
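As a rough sketch of that file's shape (in JSON form, generated from Python), assuming the public `gcr.io/cloud-builders/mvn` and `gcr.io/cloud-builders/gcloud` images: the substitution names `_ENV`, `_REGION`, and `_SERVICE` are hypothetical placeholders for the three values deploy.bash receives.

```python
import json

# Shared volume: both steps mount the same named volume at /jar.
JAR_VOLUME = {"name": "jar", "path": "/jar"}


def java_pipeline_config():
    """Two-step Cloud Build config (JSON form): build the jar, then deploy it."""
    return {
        "steps": [
            {
                # Step #1: Maven builder; entrypoint swaps the default `mvn` for bash.
                "name": "gcr.io/cloud-builders/mvn",
                "entrypoint": "bash",
                "args": ["./build.bash"],
                "volumes": [dict(JAR_VOLUME)],
            },
            {
                # Step #2: gcloud builder runs deploy.bash with three substitution values.
                "name": "gcr.io/cloud-builders/gcloud",
                "entrypoint": "bash",
                "args": ["./deploy.bash", "${_ENV}", "${_REGION}", "${_SERVICE}"],
                "volumes": [dict(JAR_VOLUME)],
            },
        ]
    }


if __name__ == "__main__":
    print(json.dumps(java_pipeline_config(), indent=2))
```

The key detail is that both steps list a volume with the same name; that shared name is what lets a file written in Step #1 be read in Step #2.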

Volumes:

Cloud Build works by letting the developer write a series of steps that define all of the operations needed for CI or CI/CD. In our case, one requirement of our application is that we build and deploy jar files.

Logically, these two steps are different enough that they should be separated. Separating them (build and deploy) also gives us better organization and a much easier path to debugging when things go awry.

What does this look like?

Here is how we handle CI/CD for one of our clients, who has a Java Spring Boot API that we are helping automate and deploy to the cloud.

Notice the volumes key, which is an array. This means we can attach multiple volumes to our build steps, and whatever one step writes to a volume can then be used by the next sequential step. Pretty nifty.

In Step #1, we are using the mvn (Maven) Cloud Builder, a preconfigured Docker container built by Google that has all the dependencies required to run Maven commands. We use Maven to build our jar files and automatically push them up to the cloud if the CI/CD finishes completely.

We are also using a feature called entrypoint, which lets us use Google's Maven container while also running a bash script directly.

The bash script will then do a clean install and create our jar file underneath the /target folder.

This is perfect, exactly what we want. However, we are missing a critical piece: we don’t have a way to pass that jar file to Step #2, the deploy step. Therefore, we need to attach a volume to Step #1.

Now we have a volume attached called jar. Not the most creative name, but it serves its purpose (passing jar files between steps). With the volume now set up, we can add a line to our build.bash file that copies the jar file onto the volume.
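In bash that copy is a single `cp` of the jar into /jar. Here is a minimal Python equivalent, assuming the jar lands in `target/` and the volume is mounted at `/jar`; both paths are parameters so the sketch can also run locally against temporary directories.

```python
import shutil
import tempfile
from pathlib import Path


def copy_jar_to_volume(target_dir="target", volume_dir="/jar"):
    """Copy every *.jar that the Maven build left in target_dir onto the shared volume."""
    jars = sorted(Path(target_dir).glob("*.jar"))
    if not jars:
        raise FileNotFoundError(f"no .jar files in {target_dir}; did the build succeed?")
    Path(volume_dir).mkdir(parents=True, exist_ok=True)
    return [Path(shutil.copy2(jar, volume_dir)) for jar in jars]


if __name__ == "__main__":
    # Local demo with temp dirs standing in for ./target and the /jar volume.
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp, "target")
        target.mkdir()
        (target / "MyApp-1.0.jar").write_bytes(b"not really a jar")
        print(copy_jar_to_volume(target, Path(tmp, "jar")))
```

Raising when no jar is found makes the build step fail loudly instead of letting the deploy step discover an empty volume later.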

Perfect, let’s now take a look at Step #2.

Notice that the name of this step is different from Step #1. Here we are leveraging a preconfigured Docker container that Google set up called gcloud. It automatically runs against our Google Cloud project and is already authenticated, so we don’t need to pass any credentials. Now we can immediately execute commands against our GCP resources without any setup, for instance decrypting files using Google Cloud KMS, something we commonly do when building CI/CD pipelines. One less thing to worry about.

We also don’t need to worry about installing all the dependencies that gcloud needs to run, which is another reason to try Cloud Build: we’ve now leveraged both Maven and the gcloud CLI without any complex setup.

Once again, we are using the entrypoint feature to run our bash script called deploy.bash. This script will be passed a few arguments.

Another way to write this, which you may or may not prefer.

The first argument is the path to our bash script. The 2nd, 3rd, and 4th arguments are environment-style configuration values, which Cloud Build supplies through substitution variables. Environment variables are commonly used to make practically any software more dynamic, but they’re especially important when trying to build reusable automation instead of one-off scripts.

We configure these variables (user-defined substitutions, whose names must start with an underscore) when we create a Cloud Build trigger. If you’re unfamiliar with Cloud Build triggers, check out our other article.
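Values configured on a trigger reach the build as substitutions: user-defined ones start with an underscore (e.g. `_ENV`), and Cloud Build replaces `$_ENV` or `${_ENV}` in the config before running the step. The Python sketch below only mimics that expansion with `string.Template` to show what deploy.bash ends up receiving; it is not how Cloud Build is implemented, and the variable names and values are hypothetical.

```python
from string import Template


def expand_args(args, substitutions):
    """Illustration only: replace $_NAME / ${_NAME} placeholders in step args.

    Cloud Build performs this expansion itself before a step runs; Python's
    string.Template just mimics the effect. Unknown placeholders raise KeyError.
    """
    return [Template(arg).substitute(substitutions) for arg in args]


if __name__ == "__main__":
    # Hypothetical trigger values for the three deploy.bash arguments.
    trigger_values = {"_ENV": "dev", "_REGION": "us-central1", "_SERVICE": "my-api"}
    args = ["./deploy.bash", "${_ENV}", "${_REGION}", "${_SERVICE}"]
    print(expand_args(args, trigger_values))
    # With entrypoint bash, deploy.bash then sees these values as $1, $2 and $3.
```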

Finally, we attach the volume the same way we did in Step #1, using a volume called jar.

Now we can add a line to our deploy.bash file that copies the jar file into our target directory. In our case, the target directory is where the rest of the deployment code looks for the jar: it expects the file at ./target/MyApp-1.0.jar. We use a local directory rather than referencing the external volume, /jar, directly because developers running the scripts locally will not have this volume to pull from.
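A minimal Python equivalent of that deploy.bash line, assuming the jar name mentioned above (MyApp-1.0.jar) and the /jar mount path; the paths are parameters so the same function can be pointed at temporary directories when testing locally.

```python
import shutil
import tempfile
from pathlib import Path


def stage_jar_for_deploy(jar_name="MyApp-1.0.jar", volume_dir="/jar", target_dir="target"):
    """Copy the jar from the shared volume into ./target, where the deploy code expects it."""
    source = Path(volume_dir) / jar_name
    if not source.is_file():
        raise FileNotFoundError(f"{source} not found; did the build step copy it onto the volume?")
    Path(target_dir).mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(source, Path(target_dir) / jar_name))


if __name__ == "__main__":
    # Local demo: temp dirs stand in for the /jar volume and ./target.
    with tempfile.TemporaryDirectory() as tmp:
        volume = Path(tmp, "jar")
        volume.mkdir()
        (volume / "MyApp-1.0.jar").write_bytes(b"not really a jar")
        print(stage_jar_for_deploy(volume_dir=volume, target_dir=Path(tmp, "target")))
```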

There we go. We just learned how to pass artifacts between Cloud Build steps in our CI/CD pipelines, entirely by leveraging Cloud Builders, which give us preconfigured Docker containers to execute operations on top of without the other headaches involved in most CI/CD solutions out there. If we ever need to build our own Docker containers to run our builds, Cloud Build supports that as well, but that is a topic for another article.

Create/Store Docker Images

In this section, we will focus on leveraging Cloud Build to handle the heavy lifting around creating and storing docker images.

Prerequisites:

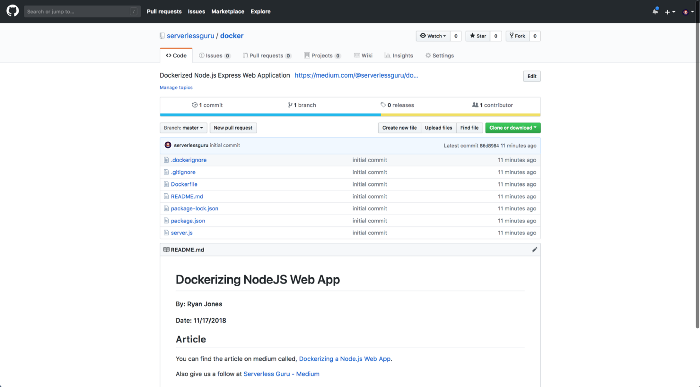

- GitHub account

- GitHub repository with a Dockerfile

- GCP account

If you don’t already have a Dockerfile, check out our repository. You can fork the repository or just copy the files to follow along with this tutorial.

Navigate to Cloud Build:

Create Trigger:

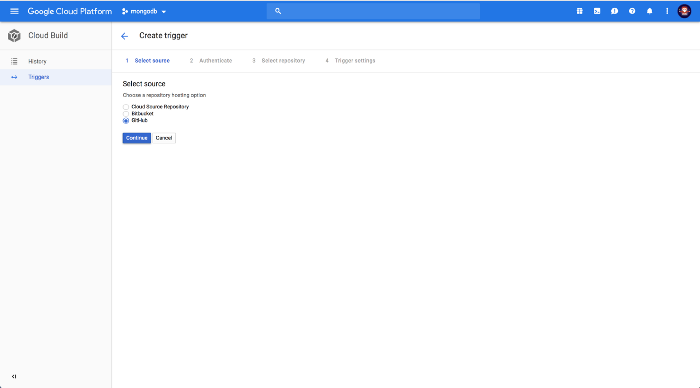

Choose GitHub:

Select Repository:

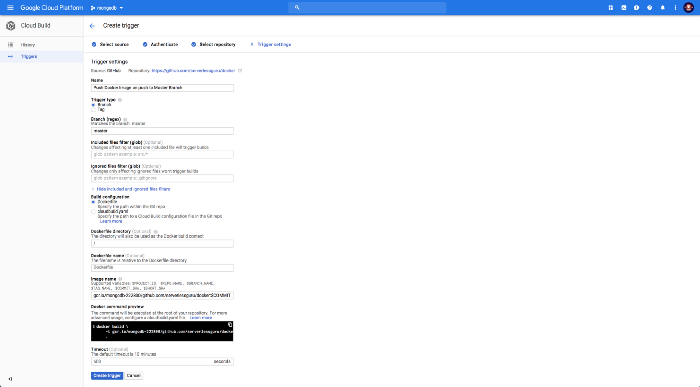

Confirm Trigger:

Trigger your build via git:

Inside your local clone of the application, make a small change and push it up to GitHub. This will kick off the Cloud Build trigger you’ve just created.

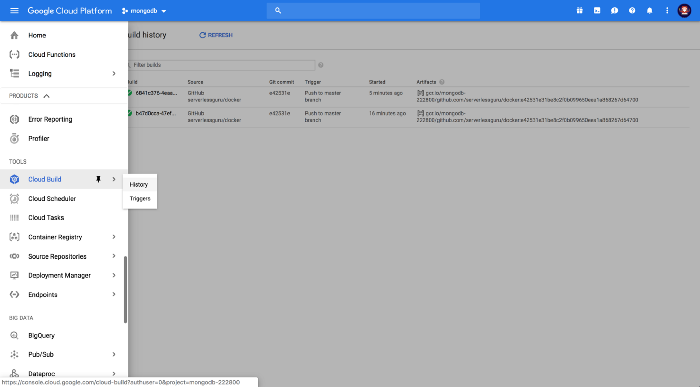

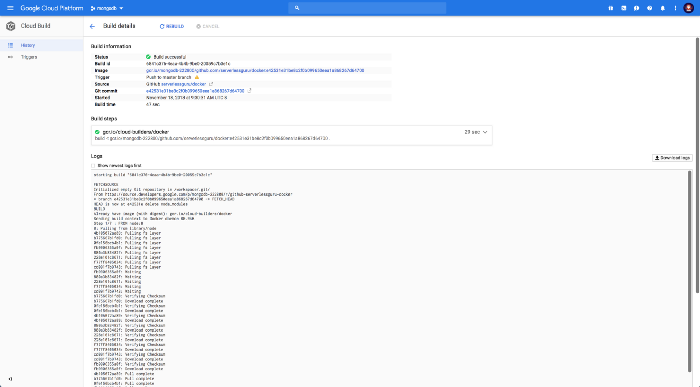

Check build history:

When the build kicks off you will be able to watch the build happen from the Cloud Build History tab.

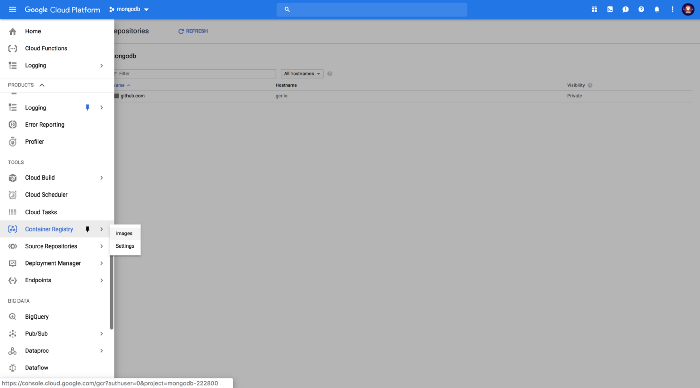

Navigate to container registry:

Container Registry is like Google Drive for container images. Amazon has its own version called ECR. Both store your container images so they are easily accessible to the cloud provider’s other services; on GCP, one example is GKE (Google Kubernetes Engine).

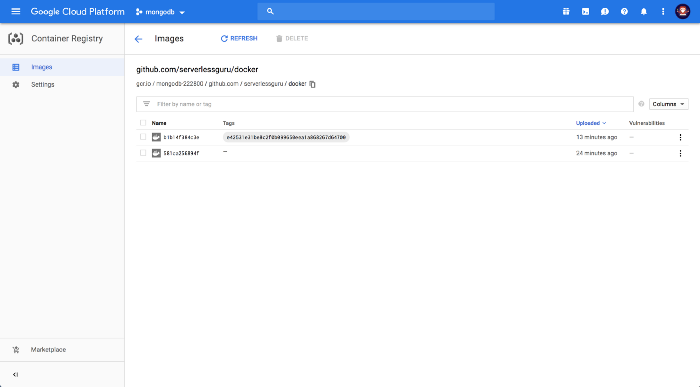

Check out the uploaded container image:

The stored image should reflect your fully built application docker image which was created from your Dockerfile. The hard part is usually getting your container images on the cloud. Cloud Build makes this really simple.

Done 🎉. Powerful and easy. We didn’t have to set up a build server, manage any credentials, or deal with the other headaches of other CI/CD pipelines. This was literally dead simple.

Now our developers can make changes to the Dockerfile, push them to GitHub, and have the resulting image land in Google Container Registry, all through their normal git workflow!
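The console flow above handles the config for you. If you would rather keep the build in a config file, a roughly equivalent build (sketched here as JSON generated from Python) uses the `gcr.io/cloud-builders/docker` builder to build the image and lists it under `images`, which tells Cloud Build to push it to the registry when the build succeeds. `$PROJECT_ID` and `$SHORT_SHA` are built-in substitutions (`$SHORT_SHA` is only populated for builds started by a repository trigger); the image name `my-app` is a placeholder.

```python
import json

DOCKER_BUILDER = "gcr.io/cloud-builders/docker"


def docker_build_config(image="gcr.io/$PROJECT_ID/my-app:$SHORT_SHA"):
    """Build the repo's Dockerfile and have Cloud Build push the resulting image."""
    return {
        "steps": [
            # Equivalent to running `docker build -t <image> .` at the repo root.
            {"name": DOCKER_BUILDER, "args": ["build", "-t", image, "."]},
        ],
        # Images listed here are pushed to the registry after the steps succeed.
        "images": [image],
    }


if __name__ == "__main__":
    print(json.dumps(docker_build_config(), indent=2))
```

Tagging with the commit SHA keeps every pushed image traceable to the git change that produced it.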

Regex Build Triggers

In this section, we will be covering how to set up a regex build trigger to only target specific git branches.

Why does this matter?

We had a client whose branching strategy included personal dev-[dev-name] branches (e.g. dev-ryan). Because the trigger matched any branch that had dev in its name, Cloud Build deployed new code to our development application every time one of the devs pushed one of these branches. Below, we will show how to lock the Cloud Build trigger to the “dev” branch only and ignore “dev-ryan”.

Open up the Cloud Build console

Navigate to your GCP project and find the Cloud Build service page. Then click Triggers.

Connect your GitHub account

We are using GitHub in this example, but Cloud Build also supports Bitbucket and Google Cloud Source Repositories.

Choose your desired Repository

If your GitHub account has many repositories, you can filter them. I’m using a simple repository that isn’t part of a real setup, since we won’t be creating the full CI/CD in this section. If you would like to see that, check the articles at the top for more information.

Specify your branch regex

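With the regex below, or your own more complicated one, we can ensure that our CI/CD builds what we intended. Here, we scope the Cloud Build trigger down to only the “dev” branch using ^dev$, where ^ and $ anchor the match to the start and end of the branch name, so dev-ryan no longer matches.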
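To see why the anchors matter, here is a small Python sketch. It assumes, as the dev-ryan problem above suggests, that a trigger fires when its pattern matches anywhere in the branch name (modeled with `re.search`); Cloud Build itself uses RE2 syntax, which agrees with Python's `re` on simple patterns like these. The branch names are made up.

```python
import re

# Hypothetical branch names pushed to the repository.
BRANCHES = ["dev", "dev-ryan", "dev-sarah", "master", "feature-dev"]


def matching_branches(pattern, branches):
    """Branches a trigger would fire on if its pattern may match anywhere in the name."""
    return [branch for branch in branches if re.search(pattern, branch)]


if __name__ == "__main__":
    print(matching_branches("dev", BRANCHES))    # ['dev', 'dev-ryan', 'dev-sarah', 'feature-dev']
    print(matching_branches("^dev$", BRANCHES))  # ['dev']
```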

More advanced

Now that we are limiting deployments to only the dev branch, we can take it further and even have a whole separate trigger on all the dev-[dev-name] branches that runs a completely different build.

For instance, if a developer pushed their branch (dev-ryan), this separate Cloud Build trigger would only build and test, whereas our dev trigger handles build, test, and deploy. This lets us reuse the same knowledge, skills, and process to accomplish both a full CI/CD and a CI for feature branches. Awesome 🎉
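Sketching the two triggers as a small Python table makes the split explicit. The trigger table, the `^dev-.+$` pattern for personal branches, and the stage names are hypothetical; as in the previous sketch, patterns are matched with `re.search`, and anchoring both patterns keeps them from overlapping.

```python
import re

# Hypothetical trigger table: branch pattern -> stages that trigger runs.
TRIGGERS = {
    r"^dev$": ["build", "test", "deploy"],  # full CI/CD for the shared dev branch
    r"^dev-.+$": ["build", "test"],         # CI only for personal dev-[dev-name] branches
}


def stages_for(branch, triggers=TRIGGERS):
    """Collect the stages of every trigger whose pattern matches the branch."""
    stages = []
    for pattern, trigger_stages in triggers.items():
        if re.search(pattern, branch):
            stages.extend(trigger_stages)
    return stages


if __name__ == "__main__":
    for branch in ["dev", "dev-ryan", "master"]:
        print(branch, stages_for(branch))
```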

Note: This is not a recommended branching strategy, but meant to showcase a small feature of Cloud Build which can be quite powerful.
