Creating user-friendly Domain Name System (DNS) names for internal resources makes it easier for clients to consume those services. Identifying a service by a DNS name instead of its IP address keeps the client-facing endpoint stable, because the name can always be resolved to the current IP address of the underlying resource, even when that address changes. Changing addresses are the norm in modern cloud architectures, where elasticity leads to resources being spun up and destroyed in response to conditions such as CPU or memory usage.

For private resources in Amazon VPCs, custom domain names can be created with Amazon Route 53 Private Hosted Zones (PHZs).

In the first part, we created a PHZ and a custom domain name for an Amazon EC2 instance-based service using a DNS “A” record.

In this part, we will accomplish the following:

- Use AWS Transit Gateway for the VPC-to-VPC connectivity necessary for IP layer 3 packet flow.

- Create and share a PHZ with the Client VPC.

- Create a custom domain name for an internal Application Load Balancer (ALB).

- Use an “Alias” record, which is unique to Amazon Route 53 and recommended for creating custom domain names for AWS-based services such as load balancers and API gateways.

Let’s dive into how you can implement this configuration step-by-step using Terraform!

Key Services and Concepts

Amazon Route 53 Hosted Zones

In Amazon Route 53, a hosted zone is a container for DNS records that describe how to route traffic for a specific domain and its subdomains.

Amazon Route 53 Private Hosted Zones (PHZs) are hosted zones designed for managing private DNS within a Virtual Private Cloud (VPC) environment. Unlike public hosted zones, whose records can be resolved over the internet, PHZs can only be resolved from within the VPCs they are associated with. This makes them ideal for use cases where private resources need to communicate using DNS names but shouldn’t be exposed to the public internet.

Alias Record

Alias records let you route traffic to selected AWS resources, such as load balancers, or from one record in a hosted zone to another record in the same zone.

Alias records appear to do the same job as standard DNS CNAME records, but they are a Route 53-specific extension to DNS. The key difference is that an Alias record can be created at the zone apex, such as example.com, which CNAME records do not support.
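To make the apex difference concrete, here is a minimal sketch of an Alias record created at the zone apex. The zone name example.internal, the load balancer name example-alb, and the Terraform labels are placeholders chosen for illustration; they are not part of this project.

```hcl
# Hypothetical zone and load balancer, looked up by name for illustration.
data "aws_route53_zone" "example" {
  name         = "example.internal"
  private_zone = true
}

data "aws_lb" "example" {
  name = "example-alb"
}

# An "A" record at the zone apex that aliases the load balancer.
# A CNAME record could not be created with this name.
resource "aws_route53_record" "apex_alias" {
  zone_id = data.aws_route53_zone.example.zone_id
  name    = data.aws_route53_zone.example.name # the apex itself
  type    = "A"

  alias {
    name                   = data.aws_lb.example.dns_name
    zone_id                = data.aws_lb.example.zone_id
    evaluate_target_health = true
  }
}
```

Note that an Alias record takes no TTL of its own; Route 53 answers with the current addresses of the alias target.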

You can get the full list of Amazon Route 53 supported DNS record types in the official documentation here.

AWS Transit Gateway

AWS Transit Gateway connects your Amazon Virtual Private Clouds (VPCs) and on-premises networks through a central hub. This connection simplifies your network and puts an end to complex peering relationships. Transit Gateway acts as a highly scalable cloud router—each new connection is made only once.

Architecture Overview

Similar to part 1, we have two VPCs: a Client VPC and a Services VPC. The Client VPC needs to use human-friendly DNS names to reach resources in the Services VPC. In this part, the service is a web server fronted by an internal Application Load Balancer. Both VPCs have only private resources, and all traffic is “east-west”.

The VPC-to-VPC connectivity is still provided by AWS Transit Gateway, but this time we create an Alias record that resolves alb.myservice.internal to the Amazon-assigned DNS name of the internal ALB, which has the format internal-alb-123456890.eu-west-1.elb.amazonaws.com.

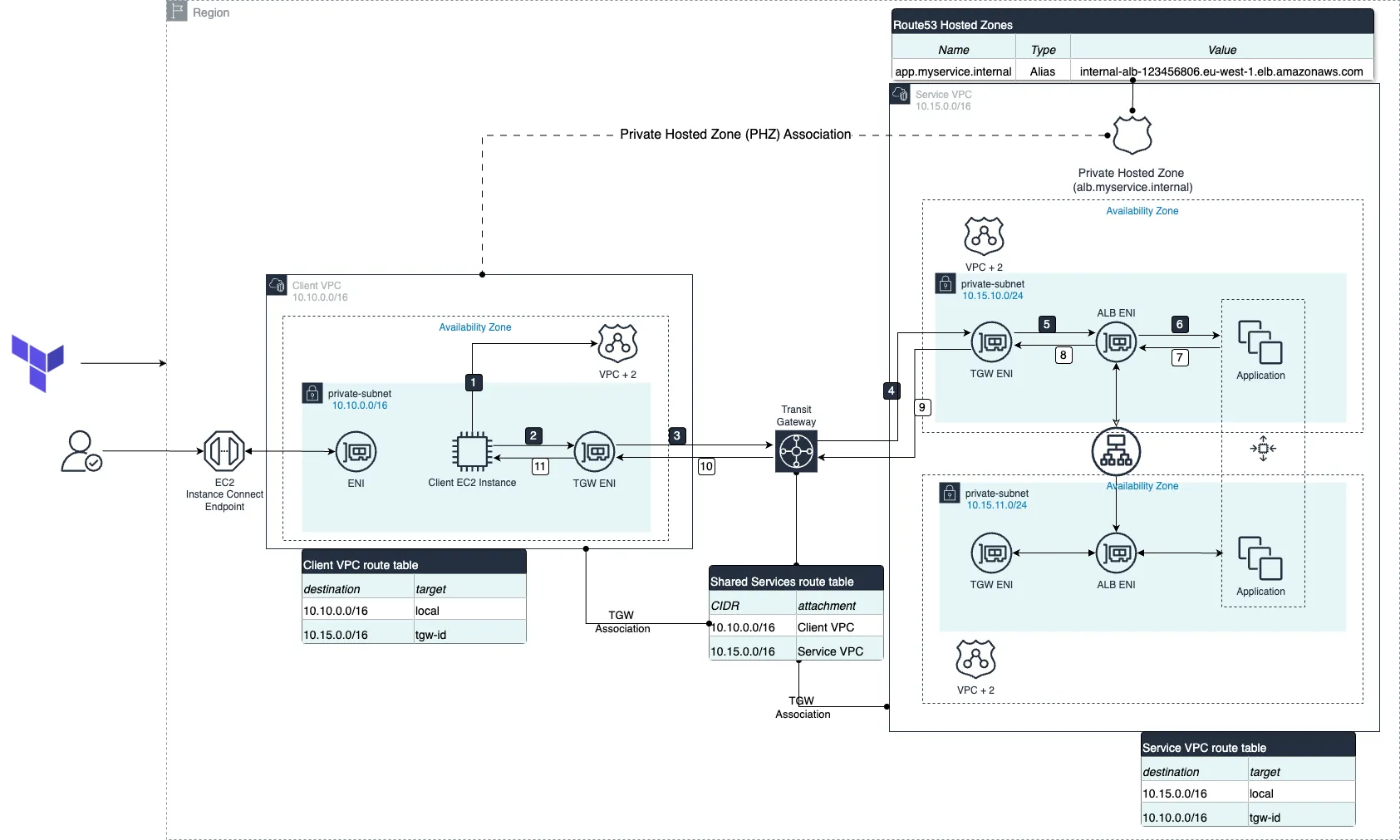

High-Level Architecture Diagram

Walkthrough

An EC2 client instance needs to connect to a service exposed by an ALB in the Services VPC via a human-friendly DNS name (alb.myservice.internal):

- The EC2 instance first resolves the custom domain name by querying the VPC+2 resolver (the Amazon Route 53 Resolver). The PHZ for alb.myservice.internal is associated with the Client VPC, so the custom domain name resolves to an IPv4 address corresponding to one of the ALB’s ENIs.

- Once the EC2 instance obtains the IP address from the DNS resolution, it sends the traffic to the TGW’s Elastic Network Interface (ENI) as per its route table.

- The TGW ENI forwards the packet to the TGW.

- As per the TGW Shared Services route table, the packet is forwarded to the Service VPC.

- The TGW ENI in the Service VPC forwards the packet to the corresponding ALB ENI in the Availability Zone.

- The ALB ENI forwards the packet to one of the EC2 targets in its Target Group for the application.

- The response packet from the EC2 target instance is sent back to the ALB ENI.

- The ALB ENI forwards the response to the TGW ENI.

- The packet is sent to the TGW.

- As per the Shared Services route table associated with the Service VPC attachment, the traffic is forwarded to the Client VPC.

- The response packet is sent by the TGW ENI in the Client VPC to the client EC2 instance’s ENI.

Implementation

Prerequisites

To proceed with this tutorial, make sure you have the following software installed:

- AWS CLI

- Terraform

To verify if all of the prerequisites are installed, you can run the following commands:

# check that the correct profile and access key are set up

aws configure

# easily obtain your AWS account ID

aws sts get-caller-identity

# check that Terraform is installed

terraform -v

Step 1: Layer 3 Reachability Between Client & Services VPCs

The outcome of a successful DNS lookup is the network address that will be used in the Destination Address field of the forwarded IP packet. In our scenario, IP reachability between the Client and Services VPCs is established with an AWS Transit Gateway (TGW). The important points to note about the TGW setup are:

- The private subnets in the Client VPC must have a route that forwards traffic destined for the Services VPC to the TGW. Conversely, the Services VPC’s private subnets need a return route so that responses can be forwarded back to the client.

resource "aws_route" "services_to_client" {

count = length(module.services_vpc.private_route_table_ids)

route_table_id = element(module.services_vpc.private_route_table_ids, count.index)

destination_cidr_block = local.client_vpc_cidr

transit_gateway_id = aws_ec2_transit_gateway.main_tgw.id

}

resource "aws_route" "client_to_services" {

count = length(module.client_vpc.private_route_table_ids)

route_table_id = element(module.client_vpc.private_route_table_ids, count.index)

destination_cidr_block = local.services_vpc_cidr

transit_gateway_id = aws_ec2_transit_gateway.main_tgw.id

}

- At the TGW level, both the Client and Services VPC attachments must be associated with the same TGW route table. We also install static routes in that table so that packets are forwarded to the correct attachment.

resource "aws_ec2_transit_gateway_route_table_association" "service_vpc_association" {

transit_gateway_attachment_id = aws_ec2_transit_gateway_vpc_attachment.service_vpc_attachment.id

transit_gateway_route_table_id = aws_ec2_transit_gateway_route_table.services_rt.id

}

resource "aws_ec2_transit_gateway_route_table_association" "client_vpc_association" {

transit_gateway_attachment_id = aws_ec2_transit_gateway_vpc_attachment.client_vpc_attachment.id

transit_gateway_route_table_id = aws_ec2_transit_gateway_route_table.services_rt.id

}

resource "aws_ec2_transit_gateway_route" "service_to_client" {

destination_cidr_block = local.client_vpc_cidr

transit_gateway_route_table_id = aws_ec2_transit_gateway_route_table.services_rt.id

transit_gateway_attachment_id = aws_ec2_transit_gateway_vpc_attachment.client_vpc_attachment.id

}

resource "aws_ec2_transit_gateway_route" "client_to_service" {

destination_cidr_block = local.services_vpc_cidr

transit_gateway_route_table_id = aws_ec2_transit_gateway_route_table.services_rt.id

transit_gateway_attachment_id = aws_ec2_transit_gateway_vpc_attachment.service_vpc_attachment.id

}
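The snippets above reference a transit gateway, two VPC attachments, and a TGW route table that are not shown here. A minimal sketch of what those resources might look like follows. The resource names match the references above, but the argument values and the use of the VPC modules' private_subnets output are assumptions, not the repository's actual definitions.

```hcl
# Sketch only: values below are assumed, not taken from the project.
resource "aws_ec2_transit_gateway" "main_tgw" {
  description = "Hub for the Client and Services VPCs"

  # Disable the default route table so that associations and routes
  # are declared explicitly, as in the snippets above.
  default_route_table_association = "disable"
  default_route_table_propagation = "disable"
}

resource "aws_ec2_transit_gateway_route_table" "services_rt" {
  transit_gateway_id = aws_ec2_transit_gateway.main_tgw.id
}

resource "aws_ec2_transit_gateway_vpc_attachment" "client_vpc_attachment" {
  transit_gateway_id = aws_ec2_transit_gateway.main_tgw.id
  vpc_id             = module.client_vpc.vpc_id
  subnet_ids         = module.client_vpc.private_subnets # assumed module output

  transit_gateway_default_route_table_association = false
  transit_gateway_default_route_table_propagation = false
}

resource "aws_ec2_transit_gateway_vpc_attachment" "service_vpc_attachment" {
  transit_gateway_id = aws_ec2_transit_gateway.main_tgw.id
  vpc_id             = module.services_vpc.vpc_id
  subnet_ids         = module.services_vpc.private_subnets # assumed module output

  transit_gateway_default_route_table_association = false
  transit_gateway_default_route_table_propagation = false
}
```

Each attachment places a TGW ENI in the listed subnets, which is where the VPC route tables hand traffic over to the transit gateway.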

Step 2: Define the Custom Domain

The next step is to create a Route 53 Private Hosted Zone (PHZ) with a custom domain name, which in our case will be myservice.internal. This PHZ will allow us to set up DNS names that are only accessible within the associated VPCs, ensuring secure and isolated name resolution for private internal services.

- Create an Amazon Route 53 PHZ in the Services VPC.

resource "aws_route53_zone" "phz" {

name = var.service_name

vpc {

vpc_id = module.services_vpc.vpc_id

}

comment = "Private hosted zone for ${var.service_name}"

tags = merge(local.common_tags, {

Name = "${var.service_name}-phz"

})

lifecycle {

create_before_destroy = true

ignore_changes = [ vpc ]

}

}

Just like with “A” records, AWS automatically creates a set of name servers for the hosted zone that are only reachable from the associated VPCs.

Next, we will create an Amazon Route 53 Alias record.

Step 3: Create the DNS Record

- Create an Amazon Route 53 Alias record that maps our user-friendly DNS name, alb.myservice.internal, to the Amazon-assigned DNS name of the internal ALB.

resource "aws_route53_record" "alb_alias" {

zone_id = aws_route53_zone.phz.id

name = "alb.${var.service_name}"

type = "A"

alias {

name = aws_lb.app_alb.dns_name

zone_id = aws_lb.app_alb.zone_id

evaluate_target_health = true

}

}
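The Alias record above points at aws_lb.app_alb, which is not shown in this walkthrough. As a rough sketch, an internal ALB that accepts HTTP from the Client VPC could be declared as follows. Everything except the aws_lb.app_alb label is hypothetical: the security group, the name values, and the private_subnets module output are assumptions for illustration.

```hcl
# Sketch only: names and values are assumed, not taken from the project.
resource "aws_security_group" "alb_sg" {
  name_prefix = "app-alb-"
  vpc_id      = module.services_vpc.vpc_id

  # Allow HTTP from the Client VPC only.
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = [local.client_vpc_cidr]
  }

  # Allow the ALB to reach its targets inside the Services VPC.
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = [local.services_vpc_cidr]
  }
}

resource "aws_lb" "app_alb" {
  name               = "app-alb"
  internal           = true # no public IP addresses
  load_balancer_type = "application"
  subnets            = module.services_vpc.private_subnets
  security_groups    = [aws_security_group.alb_sg.id]
}
```

The dns_name and zone_id attributes exported by aws_lb are the two values the alias block consumes.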

Step 4: Share the Private Hosted Zone with the Client VPC

To enable DNS-based communication for clients in the Client VPC, we need to associate the Route 53 PHZ created in step 2 with the Client VPC. Once this is done, DNS queries originating in the Client VPC can resolve the ALB’s Alias record in the private hosted zone.

- Associate Client VPC with PHZ

resource "aws_route53_zone_association" "client_vpc_association" {

zone_id = aws_route53_zone.phz.id

vpc_id = module.client_vpc.vpc_id

lifecycle {

create_before_destroy = true

}

}

Now, clients in the Client VPC will be able to use alb.myservice.internal to reach the service in the Services VPC.

Deployment

Clone the repository

git clone https://github.com/FonNkwenti/tf-route53-phz-cross-vpc.git

cd tf-route53-phz-cross-vpc/

Open the Alias_record Project Directory

Go to the Alias_record directory.

cd Alias_record/

- Update variables.tf with your variable preferences or leave the defaults.

- Update locals.tf with your own local variables or work with the defaults.

- Create a terraform.tfvars file in the root directory and pass in values for some of the variables.

main_region = "eu-west-1"

account_id = 123456789123

environment = "dev"

project_name = "tf-route53-phz-cross-vpc"

service_name = "myservice.internal"

cost_center = "237"

ssh_key_pair = "my_key_pair"

service_ami = "ami-12345674f06d663d2"

Remember to provide the AMI ID of an existing AMI that has your application code. Refer to the Creating an AMI from an Amazon EC2 Instance tutorial if you don’t have an existing AMI. Any AMI created from an EC2 HTTP server that can be reached via HTTP on port 80 will suffice. This is crucial since we will be deploying the service in a VPC that doesn’t have internet access.
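Mistyped values in terraform.tfvars are easier to catch before deployment if the variables carry validation rules. The sketch below shows how two of the variables could be declared. These declarations are illustrative assumptions and may differ from the repository's variables.tf. In particular, typing account_id as a string, and quoting it in terraform.tfvars, can avoid problems with account IDs that start with a zero.

```hcl
# Hypothetical declarations; the project's variables.tf may differ.
variable "account_id" {
  description = "12-digit AWS account ID, passed as a string"
  type        = string

  validation {
    condition     = can(regex("^[0-9]{12}$", var.account_id))
    error_message = "The account_id value must be exactly 12 digits."
  }
}

variable "service_ami" {
  description = "ID of an AMI that serves HTTP on port 80"
  type        = string

  validation {
    # AMI IDs are "ami-" followed by 8 or 17 hexadecimal characters.
    condition     = can(regex("^ami-([0-9a-f]{8}|[0-9a-f]{17})$", var.service_ami))
    error_message = "The service_ami value must be a valid AMI ID, for example ami-12345674f06d663d2."
  }
}
```

With rules like these, terraform plan fails with a readable message instead of a later API error. The validation only checks the format; it cannot confirm that the AMI exists in the chosen Region.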

Initialize Terraform.

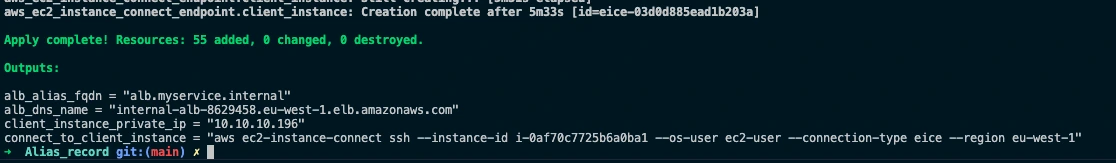

terraform init

Deploy the project.

terraform apply --auto-approve

Copy the output values after the deployment is completed. We will use them to test the cross-VPC DNS resolution.

Testing DNS Resolution from the Client VPC

We will use an EC2 Instance Connect Endpoint to privately connect to the client EC2 instance in the Client VPC and run our tests.

To ensure everything is set up correctly, we’ll test DNS resolution from an instance within the Client VPC. This step verifies that the instances in the Client VPC can resolve the custom DNS records created in the PHZ and can connect to the internal ALB in the Services VPC via the TGW.

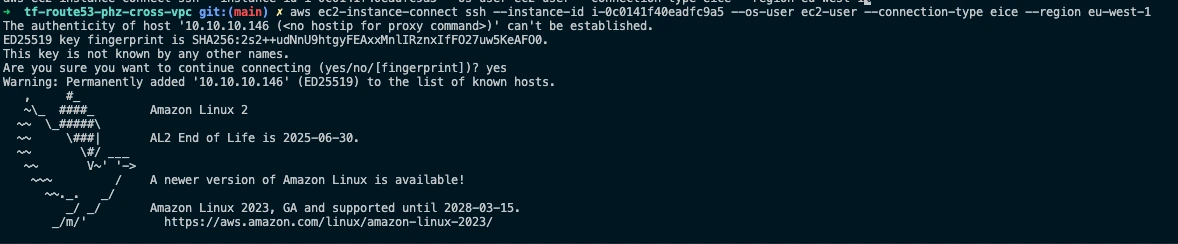

1. Log In to the Instance in the Client VPC:

- Copy the value of the connect_to_client_instance Terraform output and run the command in your terminal to open a secure SSH connection through the EC2 Instance Connect Endpoint.

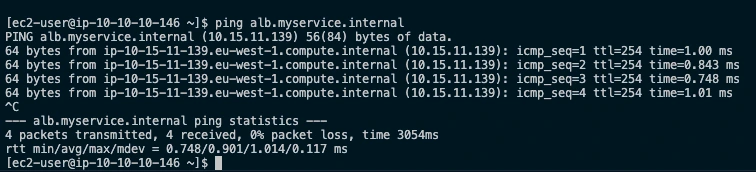

2. Test DNS Resolution:

- Use DNS commands like

ping,nslookupandcurlto verify that the custom DNS name resolves correctly to the server’s private IP. - Using

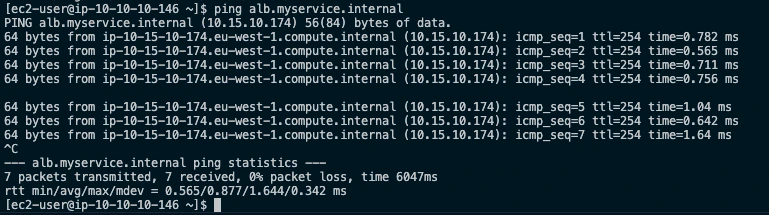

ping:

We ran two successful ping commands, and a different IP address responded to each ping, which shows that the custom domain name is working.

- Using

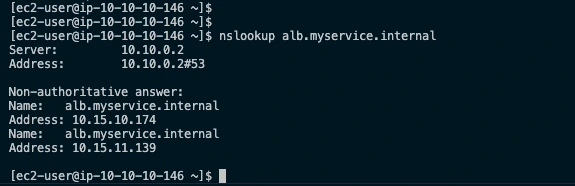

nslookup(for more detailed DNS resolution info):

The successful output of nslookup shows two non-authoritative IPv4 addresses.

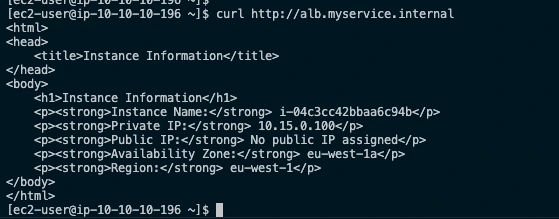

- Using curl:

With curl, we can successfully download the webpage for the web app.

Troubleshooting Tips

- Invalid AMI ID

- If you do not already have an AMI, make sure you follow the **Creating an AMI from an Amazon EC2 Instance** tutorial to create one.

- If you have an AMI, make sure you copy the AMI ID and update the service_ami variable accordingly.

- Ensure that the project is deployed in the same AWS Region as your EC2 AMI.

- DNS Resolution Fails:

- Ensure the private hosted zone is associated with both the Services and Client VPCs.

- Check that DNS resolution is enabled in both VPCs. Since we are using the terraform-aws-modules/vpc/aws open source module to create the VPCs, ensure that the values for enable_dns_support and enable_dns_hostnames are set to true.

- Connectivity Issues:

- Use the AWS Network Manager’s [Reachability Analyzer](https://docs.aws.amazon.com/vpc/latest/reachability/what-is-reachability-analyzer.html) to pinpoint where connectivity fails along the network path between the client instance and the service instance.

- Verify that the security groups and network ACLs on both the client and server instances allow traffic between the VPCs.

- Make sure the Transit Gateway VPC attachments are properly set up, and that the relevant routes are added to both the VPC route tables and the TGW route table.

- Terraform Deployment Issues:

- Make sure you are using a valid SSH Key pair in the region where you are deploying the project as this step is not explicitly described in this tutorial.
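For the DNS settings check in the list above, the two flags are inputs of the terraform-aws-modules/vpc/aws module. The sketch below shows where they sit in a module call. The name, availability zones, and subnet layout are placeholder assumptions, so compare them against the project's own module blocks rather than copying them.

```hcl
# Sketch only: name, azs and subnet layout are hypothetical.
module "client_vpc" {
  source = "terraform-aws-modules/vpc/aws"

  name = "client-vpc"
  cidr = local.client_vpc_cidr

  azs = ["eu-west-1a", "eu-west-1b"]

  # Carve two private subnets out of the VPC CIDR.
  private_subnets = [for i in range(2) : cidrsubnet(local.client_vpc_cidr, 4, i)]

  # Both must be true for instances to resolve PHZ records
  # through the VPC+2 resolver.
  enable_dns_support   = true
  enable_dns_hostnames = true
}
```

The same two inputs need to be true on the Services VPC module as well.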

Clean Up Resources

Remove all resources created by Terraform in the Service Consumer's account

- Navigate to the Alias_record directory:

cd tf-route53-phz-cross-vpc/Alias_record/

- Destroy all Terraform resources:

terraform destroy --auto-approve

Summary and Key Takeaways

In this tutorial, we walked through how to set up cross-VPC DNS resolution using Amazon Route 53 Private Hosted Zones (PHZs). By creating a PHZ, associating it with both the Client and Services VPCs, and adding custom DNS records, we enabled instances in the Client VPC to access resources in the Services VPC using easy-to-remember custom DNS names. Here’s a quick recap of the process:

- VPC-to-VPC Connectivity: We established layer 3 connectivity with an AWS Transit Gateway.

- Private Hosted Zone Setup: We created a Route 53 PHZ in the Services VPC and associated it with the Client VPC.

- Custom DNS Records: We created a Route 53 Alias record for our internal load balancer’s automatically assigned DNS name.

- Testing: We successfully verified the setup by testing DNS resolution from the Client VPC using standard networking commands like ping, nslookup and curl.

Other than EC2 instances and Application Load Balancers, you can use PHZs to create custom domain names for the other load balancer types (Classic ELB, NLB, GWLB, etc.) and other AWS resources such as VPC interface endpoints and private API Gateway endpoints.

Here is the complete list of Amazon Route 53 Supported DNS record types.
